-

Model-Based Machine Learning Session

Abstract

Today, thousands of scientists and engineers are applying machine learning to an extraordinarily broad range of domains, and over the last five decades, researchers have created thousands of machine learning algorithms. Traditionally, an engineer wanting to solve a problem using machine learning must choose one or more of these algorithms to try, and their choice is often constrained by their familiarity with an algorithm or by the availability of software implementations. Chris will talk about ‘model-based machine learning’, a new approach in which a custom solution is formulated for each new application. He will show how probabilistic graphical models, coupled with efficient inference algorithms, provide a flexible foundation for model-based machine learning, and he’ll describe several large-scale commercial applications of this framework.
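A minimal sketch of the model-based workflow may help. You write down a probabilistic graphical model for the problem, then run a generic inference procedure on it. The three-variable model below (Rain and Sprinkler both influencing WetGrass) and all of its probabilities are hypothetical and chosen only for illustration. Exact enumeration stands in for the efficient inference algorithms the talk refers to, and it only scales to very small models.

```python
import itertools

# Hypothetical conditional probability tables for the graph
#   Rain -> WetGrass <- Sprinkler
P_RAIN = {True: 0.2, False: 0.8}
P_SPRINKLER = {True: 0.1, False: 0.9}
P_WET_GIVEN = {  # P(WetGrass=True | Rain, Sprinkler)
    (True, True): 0.99, (True, False): 0.9,
    (False, True): 0.8, (False, False): 0.0,
}

def joint(rain, sprinkler, wet):
    """Joint probability, factorised according to the graph structure."""
    p_wet = P_WET_GIVEN[(rain, sprinkler)]
    return P_RAIN[rain] * P_SPRINKLER[sprinkler] * (p_wet if wet else 1.0 - p_wet)

def posterior_rain_given_wet(wet=True):
    """Exact inference: sum the joint over unobserved variables, then normalise."""
    numerator = sum(joint(True, s, wet) for s in (True, False))
    evidence = sum(joint(r, s, wet)
                   for r, s in itertools.product((True, False), repeat=2))
    return numerator / evidence
```

With these made-up numbers, observing wet grass raises P(Rain) from a prior of 0.2 to about 0.74. Changing the application means changing the graph and the tables, not inventing a new algorithm.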

Biography

Christopher Bishop is a Microsoft Technical Fellow and Director of the Microsoft Research Lab in Cambridge, UK.

He is also Professor of Computer Science at the University of Edinburgh, and a Fellow of Darwin College, Cambridge. In 2004, he was elected Fellow of the Royal Academy of Engineering, in 2007 he was elected Fellow of the Royal Society of Edinburgh, and in 2017 he was elected Fellow of the Royal Society.

At Microsoft Research, Chris oversees a world-leading portfolio of industrial research and development, with a strong focus on machine learning and AI, that is creating breakthrough technologies in cloud infrastructure, security, workplace productivity, computational biology, and healthcare.

Chris obtained a BA in Physics from Oxford, and a PhD in Theoretical Physics from the University of Edinburgh, with a thesis on quantum field theory. From there, he developed an interest in pattern recognition, and became Head of the Applied Neurocomputing Centre at AEA Technology. He was subsequently elected to a Chair in the Department of Computer Science and Applied Mathematics at Aston University, where he set up and led the Neural Computing Research Group.

Chris is the author of two highly cited and widely adopted machine learning textbooks: Neural Networks for Pattern Recognition (1995) and Pattern Recognition and Machine Learning (2006). He has also worked on a broad range of applications of machine learning in domains ranging from computer vision to healthcare. Chris is a keen advocate of public engagement in science, and in 2008 he delivered the prestigious Royal Institution Christmas Lectures, established in 1825 by Michael Faraday, and broadcast on national television.

-

Predicting Cancer Response using In Silico Mechanistic Models

Abstract

Cancer is a highly complex cellular state where mutations impact a multitude of signalling pathways operating in different cell types. In order to understand and fight cancer, it must be viewed as a system, rather than as a set of independent cellular activities. In this talk, I will discuss some of the progress made towards achieving such a system-level understanding using in silico mechanistic models. I will describe our studies to better understand aberrant signalling programs in leukemia, breast cancer and glioblastoma, and to identify novel combination treatments to overcome drug resistance. These computational cancer programs have been shown to improve our understanding of the mechanistic rules that drive cancers and, with the help of advanced machine learning and AI, will pave the way for better detection, diagnosis and personalised treatment of patients.
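One common style of executable mechanistic model is a Boolean network. Each protein is on or off, and update rules encode how signals propagate. The sketch below assumes a hypothetical toy pathway (growth factor to RAS to RAF to ERK to proliferation), not one of the models from the talk. It shows how a mutation and a drug can both be expressed as rule changes and their effect read off the model's steady state.

```python
def make_rules(growth_factor, ras_mutant, raf_inhibitor):
    """Hypothetical toy pathway GF -> RAS -> RAF -> ERK -> PROLIFERATION.
    A constitutively active RAS mutant bypasses the growth-factor signal;
    a RAF inhibitor (the 'drug') blocks signalling downstream of RAS."""
    return {
        "RAS": lambda s: growth_factor or ras_mutant,
        "RAF": lambda s: s["RAS"] and not raf_inhibitor,
        "ERK": lambda s: s["RAF"],
        "PROLIFERATION": lambda s: s["ERK"],
    }

def simulate(rules, max_steps=50):
    """Synchronous updates from an all-off state until a fixed point is reached."""
    state = {node: False for node in rules}
    for _ in range(max_steps):
        nxt = {node: rule(state) for node, rule in rules.items()}
        if nxt == state:
            return state
        state = nxt
    raise RuntimeError("no fixed point within max_steps (the model may oscillate)")
```

In this toy model, the RAS mutant drives proliferation even without a growth-factor signal, and adding the RAF inhibitor switches it off again. Real models of this kind are far larger and are analysed with formal verification rather than a single simulation run.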

Biography

Jasmin Fisher is a Principal Researcher at Microsoft Research Cambridge in the Programming Principles & Tools group. She is also an Associate Professor of Systems Biology in the Department of Biochemistry at the University of Cambridge. She is a member of the Cancer Research UK Cambridge Centre, Cambridge Systems Biology Centre and the Wellcome Trust-MRC Cambridge Stem Cell Institute. In 2016 she was elected Fellow of Trinity Hall, Cambridge. In 2017 Jasmin was named one of the Top Outstanding Female Leaders in UK Healthcare by BioBeat. In 2018 she was elected Fellow of the Royal Society of Biology.

Jasmin received her Ph.D. in Neuroimmunology from the Weizmann Institute of Science in 2003. She then started her work on the application of formal methods to biology as a postdoctoral fellow in the Department of Computer Science at the Weizmann Institute, and then continued to work on the development of novel formalisms and tools specifically tailored for modelling biological processes in the School of Computer Science at EPFL in Switzerland. In 2007, Jasmin joined the Microsoft Research Lab in Cambridge. In 2009, she was also appointed a Research Group Leader at the University of Cambridge.

Jasmin has devoted her career to developing methods for Executable Biology; her work has inspired the design of many new biological studies. She is a pioneer in using formal verification methods to analyse mechanistic models of cellular processes and disease. Her research group focuses on cutting-edge technologies for modelling molecular mechanisms of cancer and the development of novel drug therapies.

-

Fitting models to data: Accuracy, Speed, Robustness

Abstract

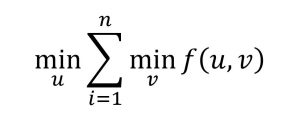

In vision and machine learning, almost everything we do may be considered a form of model fitting. Whether estimating the parameters of a convolutional neural network, computing structure and motion from image collections, tracking objects in video, computing low-dimensional representations of datasets, estimating parameters for an inference model such as Markov random fields, or extracting shape spaces such as active appearance models, it almost always boils down to minimizing an objective containing some parameters of interest as well as some latent or nuisance parameters. This session will describe several tools and techniques for solving such optimization problems. In particular, I will present results on some common optimization problems in computer vision and machine learning, for example matrix completion, 3D structure from motion, and curve and surface fitting with nonconvex robust losses, all of which take this form: an objective minimized over both parameters of interest and latent or nuisance parameters.

I show how two mutually contradictory strategies, namely variable projection (aka VarPro) and simultaneous joint optimization (aka “Lifting”), interact in these problems. Choosing the right strategy can yield startling improvements in real-world convergence rate or basin of convergence.
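The two strategies can be contrasted on a deliberately tiny problem. The sketch assumes a hypothetical separable least-squares model y ≈ a·exp(−b·t), which is not one of the problems from the talk. Here a enters linearly and b nonlinearly. The variable-projection version eliminates a in closed form for each b and searches over b alone. The joint version updates (a, b) together with plain Gauss-Newton steps, standing in for simultaneous joint optimization.

```python
import numpy as np

def basis(b, t):
    # phi(b) = exp(-b t): the nonlinear part of the hypothetical model y ~ a * phi(b).
    return np.exp(-b * t)

def reduced_cost(b, t, y):
    """VarPro: for fixed b, solve for the linear amplitude a in closed form,
    then return the remaining least-squares cost and that optimal a."""
    phi = basis(b, t)
    a_star = (phi @ y) / (phi @ phi)
    r = a_star * phi - y
    return 0.5 * (r @ r), a_star

def fit_varpro(t, y, lo=0.0, hi=10.0, iters=100):
    """Minimise the reduced cost over b alone with golden-section search
    (assumes the reduced cost is unimodal on [lo, hi])."""
    g = (np.sqrt(5.0) - 1.0) / 2.0
    x1, x2 = hi - g * (hi - lo), lo + g * (hi - lo)
    f1, f2 = reduced_cost(x1, t, y)[0], reduced_cost(x2, t, y)[0]
    for _ in range(iters):
        if f1 < f2:   # minimum lies in [lo, x2]
            hi, x2, f2 = x2, x1, f1
            x1 = hi - g * (hi - lo)
            f1 = reduced_cost(x1, t, y)[0]
        else:         # minimum lies in [x1, hi]
            lo, x1, f1 = x1, x2, f2
            x2 = lo + g * (hi - lo)
            f2 = reduced_cost(x2, t, y)[0]
    b = 0.5 * (lo + hi)
    return reduced_cost(b, t, y)[1], b

def fit_joint(t, y, a, b, iters=50):
    """Joint optimisation: Gauss-Newton steps on (a, b) simultaneously."""
    for _ in range(iters):
        phi = basis(b, t)
        r = a * phi - y
        J = np.column_stack([phi, -a * t * phi])  # Jacobian of r w.r.t. (a, b)
        step = np.linalg.lstsq(J, -r, rcond=None)[0]
        a, b = a + step[0], b + step[1]
    return a, b
```

VarPro needs no initial guess for a and searches a lower-dimensional space. The joint update needs a starting point for every parameter and may fail to converge from a poor one. Which behaves better on real problems is exactly the question the talk examines.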

Biography

Andrew Fitzgibbon is a scientist with HoloLens at Microsoft, Cambridge, UK. He is best known for his work on 3D vision, having been a core contributor to the Emmy-award-winning 3D camera tracker “boujou” and Kinect for Xbox 360, but his interests are broad, spanning computer vision, graphics, machine learning, and occasionally a little neuroscience.

He has published numerous highly cited papers and received many awards for his work, including ten “best paper” prizes at various venues, the Silver medal of the Royal Academy of Engineering, and the BCS Roger Needham award. He is a fellow of the Royal Academy of Engineering, the British Computer Society, and the International Association for Pattern Recognition. Before joining Microsoft in 2005, he was a Royal Society University Research Fellow at Oxford University, having previously studied at Edinburgh University, Heriot-Watt University, and University College, Cork.

-

Recurrent Neural Networks and Graph Neural Networks

Abstract

A basic introduction to deep learning on sequences and graphs.
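As a rough picture of the two model families, the sketch below uses NumPy with illustrative shapes and names, not code from the session. An Elman-style recurrent network reuses one set of weights at every time step of a sequence. A simple graph layer updates each node from its own features and the mean of its neighbours' features. Mean aggregation is one common choice among several.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Elman-style RNN: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), with h_0 = 0.
    Returns the stacked hidden states, one row per time step."""
    h = np.zeros(W_hh.shape[0])
    states = []
    for x in xs:  # the same weights are reused at every time step
        h = np.tanh(W_xh @ x + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

def gnn_layer(H, A, W_self, W_nbr):
    """One message-passing layer: each node combines its own features with the
    mean of its neighbours' features. H is (nodes, features); A is a 0/1 adjacency matrix."""
    deg = A.sum(axis=1, keepdims=True)
    nbr_mean = (A @ H) / np.maximum(deg, 1.0)  # isolated nodes receive a zero message
    return np.tanh(H @ W_self + nbr_mean @ W_nbr)
```

A useful property of the graph layer is permutation equivariance. Relabelling the nodes (permuting the rows of H together with the rows and columns of A) permutes the output rows in the same way, so the layer does not depend on an arbitrary node ordering.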

Biography

Alex Gaunt is a researcher in the Machine Intelligence and Perception group at Microsoft Research Cambridge.

His research focuses on building machine learning systems that can discover and reason about program-like structure in data. This has application in automatic program synthesis, where we want to write programs given only a handful of example inputs and corresponding outputs, and also in discovery of interpretable algorithmic processes from experimental observations of complex systems.

He obtained his PhD in Physics from the University of Cambridge under the supervision of Prof. Zoran Hadzibabic where he performed experimental studies of non-equilibrium dynamics of Bose-Einstein condensates.

-

AI Through the Looking Glass

Abstract

Artificial Intelligence is set to transform society in the coming decades in ways that have long been predicted by science fiction writers but are only now becoming feasible. While AI is still a long way from being as powerful as the human brain, many machines can now outperform human beings, particularly when it comes to analysing large amounts of data. This will lead to many jobs being replaced by automated processes and machines.

As with all major technological revolutions, such advancements bring with them unexpected opportunities and challenges for society, and a need to consider their ethical, accountability and diversity impacts.

In this talk, Wendy Hall will lay out why we need to take a socio-technical approach to every aspect of the evolution of AI in society, to ensure that we all reap the benefits of AI and protect ourselves as much as possible from applications of AI that might be harmful to society.

As Alice found when she went through the looking glass, everything is not always what it first appears to be.

Biography

Dame Wendy Hall, DBE, FRS, FREng is Regius Professor of Computer Science, Pro Vice-Chancellor (International Engagement) at the University of Southampton and is the Executive Director of the Web Science Institute.

With Sir Tim Berners-Lee and Sir Nigel Shadbolt she co-founded the Web Science Research Initiative in 2006 and is the Managing Director of the Web Science Trust, which has a global mission to support the development of research, education and thought leadership in Web Science.

She became a Dame Commander of the Order of the British Empire in the 2009 UK New Year’s Honours list, and is a Fellow of the Royal Society.

She has previously been President of the ACM, Senior Vice President of the Royal Academy of Engineering, a member of the UK Prime Minister’s Council for Science and Technology, a founding member of the European Research Council, Chair of the European Commission’s ISTAG (2010-2012) and a member of the Global Commission on Internet Governance.

She is currently a member of the World Economic Forum’s Global Futures Council on the Digital Economy, and is co-Chair of the UK government’s AI Review, which was published in October 2017.

-

Intelligent Machines

Abstract

Intelligent Machines compares biological intelligent machines, i.e. humans, with artificial intelligent machines. The key issue is the choice of human-oriented goals as AIs become more powerful.

Biography

In 2015, Hermann was awarded a KBE for services to Engineering and Industry.

Serial entrepreneur and co-founder of Amadeus Capital Partners, Dr Hermann Hauser KBE has wide experience in developing and financing companies in the information technology sector. He co-founded a number of high-tech companies, including Acorn Computers (which spun out ARM), E-trade UK, Virata and Cambridge Network. Hermann also served as vice president of research at Olivetti, where he established a global network of research laboratories. Since leaving Olivetti, Hermann has founded over 20 technology companies. In 1997, he co-founded Amadeus Capital Partners. At Amadeus, he invested in CSR, Solexa, Icera, Xmos and Cambridge Broadband.

Hermann is a Fellow of the Royal Society, the Institute of Physics and of the Royal Academy of Engineering and an Honorary Fellow of King’s College, Cambridge. In 2001, he was awarded an Honorary CBE for ‘innovative service to the UK enterprise sector’. In 2004, he was made a member of the Government’s Council for Science and Technology and in 2013, he was made a Distinguished Fellow of BCS, the Chartered Institute for IT.

Hermann has honorary doctorates from the Universities of Loughborough, Bath, Anglia Ruskin and The University of Strathclyde.

-

Learning to Play: The Multi-Agent Reinforcement Learning in Malmo (MARLO) Competition

Abstract

The Multi-Agent Reinforcement Learning in Malmo (MARLO) competition is a new challenge that fosters research on Multi-Agent Reinforcement Learning in multi-player games. The problem of learning in multi-agent settings is one of the fundamental problems in artificial intelligence research and poses unique research challenges. For example, the presence of independently learning agents can result in non-stationarity, and the presence of adversarial agents can hamper exploration and consequently the learning progress. The MARLO competition provides a test bed that requires participants’ agents to collaborate and compete with others’ submitted agents, accelerating progress towards tackling these challenging goals. This talk gives an overview of the competition and shows how to get started on building your own competition agents.
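A small toy example shows where the non-stationarity comes from. This sketch is unrelated to the MARLO or Malmo APIs and uses no Minecraft environment. It has two independent epsilon-greedy learners in a hypothetical two-action coordination game. Each agent treats the other as part of its environment, so the reward an agent observes for a fixed action keeps drifting as its partner's policy changes.

```python
import numpy as np

# Hypothetical 2x2 coordination game: both agents get reward 1 only if their actions match.
PAYOFF = np.array([[1.0, 0.0],
                   [0.0, 1.0]])

def independent_learners(episodes=2000, alpha=0.1, epsilon=0.1, seed=0):
    """Two epsilon-greedy learners, each keeping its own action values and
    treating the other agent as part of the environment."""
    rng = np.random.default_rng(seed)
    q = [np.zeros(2), np.zeros(2)]  # one value table per agent
    matches = 0
    for _ in range(episodes):
        actions = []
        for agent in range(2):
            if rng.random() < epsilon:
                actions.append(int(rng.integers(2)))      # explore
            else:
                actions.append(int(np.argmax(q[agent])))  # exploit (ties -> action 0)
        reward = PAYOFF[actions[0], actions[1]]
        matches += int(reward > 0)
        for agent in range(2):
            # The expected reward of a fixed action depends on the other agent's
            # current policy, which keeps changing as that agent learns.
            a = actions[agent]
            q[agent][a] += alpha * (reward - q[agent][a])
    return q, matches / episodes
```

In a cooperative game like this one, the learners usually settle on a shared convention. With adversarial or mixed-motive payoffs, the same moving-target effect can prevent convergence altogether.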

Biography

Katja Hofmann is a researcher at Microsoft Research Cambridge. As part of the Machine Intelligence and Perception group, she is research lead of Project Malmo. Before joining Microsoft Research, Katja received her PhD in Computer Science from the University of Amsterdam, her MSc in Computer Science from California State University, and her BSc in Computer Science from the University of Applied Sciences in Dresden, Germany. Katja’s main research goal is to develop interactive learning systems. Her dream is to develop AIs that learn to collaborate with human players in Minecraft.

-

An Oncologist’s Guide to using Machine Learning for Cancer Therapy

Abstract

For those of us born after 1970, one in two of us will develop cancer at some point in our lifetimes. Radiation therapy is a useful anti-cancer treatment that is used in about 50% of all patients who develop cancer. Join me as I discuss practical ways in which I use machine learning to improve outcomes for patients undergoing radiation therapy.

Biography

Dr Raj Jena is a Consultant Clinical Oncologist for Cambridge University Hospitals NHS Foundation Trust, visiting fellow in the Faculty of Engineering and Physical Science at the University of Surrey, and a visiting scientist at CERN, the European Organization for Nuclear Research. His research interests are in the application of advanced imaging techniques and radiotherapy treatment to improve outcomes for patients with central nervous system tumours.

-

The Human Consequences of AI

Abstract

Powerful AI systems are being developed that have the potential to transform people’s lives and computer scientists are increasingly being required to reflect on the possible consequences of their research. In this session we want to challenge participants to think about this issue, not just as one of policy, but as a space within which computer science could do its best work, by embracing a multi-disciplinary approach to the work we do and focussing on new tools and techniques that may help make the issue become more tractable. Marina Jirotka will open the session with a call for participants to put responsible innovation at the heart of their work; Ewa Luger will draw on her rich field work to comment on the diminishing space that makes us uniquely human; and finally Simone Stumpf will present practical examples that help us create AI systems that are truly intelligible to people. We’ll close with what we hope will be a lively discussion.

Biography

Marina Jirotka is Professor of Human Centred Computing in the Department of Computer Science at the University of Oxford. Her expertise involves co-producing user and community requirements and human computer interaction, particularly for collaborative systems (CSCW). She has been at the forefront of recent work in Responsible Innovation (RI) in the UK and the European Union. She leads an interdisciplinary research group investigating the responsible development of technologies that are more responsive to societal acceptability and desirability. Her current projects span a range of topics in RI: she leads the Responsible Innovation initiative for Quantum Technologies; is Co-PI on the EPSRC Digital Economy TIPS project Emancipating Users Against Algorithmic Biases for a Trusted Digital Economy (UnBias); is PI on the EPSRC Digital Economy TIPS project Rebuilding and Enhancing Trust in Algorithms (ReEnTrust); and is co-director of an Observatory for Responsible Research and Innovation in ICT (ORBIT) that will provide RRI services to ICT researchers. Marina is a Chartered IT Professional of the British Computer Society and sits on the ICT Ethics Specialist Group committee. She has published widely in international journals and conferences on e-Research, Human Computer Interaction, Computer Supported Cooperative Work and Requirements Engineering.

-

How to Write a Great Research Paper

How to Give a Great Research Talk

Abstract

Writing papers and giving talks are key skills for any researcher, but they aren’t easy. In this pair of presentations, I’ll describe simple guidelines that I follow for writing papers and giving talks, which I think may be useful to you too. I don’t have all the answers (far from it), so I hope that the presentation will evolve into a discussion in which you share your own insights, rather than a lecture.

Biography

Simon Peyton Jones, MA, MBCS, CEng, graduated from Trinity College Cambridge in 1980. After two years in industry, he spent seven years as a lecturer at University College London, and nine years as a professor at Glasgow University, before moving to Microsoft Research in 1998. His main research interest is in functional programming languages, their implementation, and their application. He has led a succession of research projects focused around the design and implementation of production-quality functional-language systems for both uniprocessors and parallel machines. He was a key contributor to the design of the now-standard functional language Haskell, and is the lead designer of the widely used Glasgow Haskell Compiler (GHC). He has written two textbooks about the implementation of functional languages. More generally, he is interested in language design, rich type systems, software component architectures, compiler technology, code generation, runtime systems, virtual machines, garbage collection, and more. He is particularly motivated by the direct application of principled theory to practical language design and implementation; that’s one reason he loves functional programming so much. He is also keen to apply ideas from advanced programming languages to mainstream settings.

-

Unity ML-Agents: A flexible platform for Deep RL research

Abstract

Unity ML-Agents is an open source toolkit that enables machine learning developers and researchers to train agents in realistic, complex 3D environments with lower technical barriers. This session will walk through the design of the platform and describe how the environments these design choices make possible can uniquely challenge Deep RL algorithms. We will walk through the environment creation process, showing some of the example tasks we have created. In doing so, we will demonstrate how rapid iteration between environment and algorithm design can ultimately improve both.

Biography

Arthur Juliani is a Machine Learning Engineer at Unity Technologies, and the lead developer of Unity ML-Agents. An active blogger on topics such as Deep Learning, Reinforcement Learning, and Cognitive Science, his Medium blog has reached over 100,000 readers. Arthur has also contributed to publications from O’Reilly and Hanbit Media.

-

Using Ethical Principles to Inform Industry Solutions

Abstract

There are increasing discussions about whether and how tech companies think about ethics when designing solutions, especially those related to Artificial Intelligence. The reality is that everything we build is designed with a set of ethics, whether or not we think about them. In this session, we will discuss Microsoft’s “enduring values”, the company’s approach to supporting more ethical product development, and what that means for a product team building solutions for enterprise customers.

Biography

Jacquelyn Krones is Principal for Ethics in the AI Perceptions and Mixed Reality Group at Microsoft. Her mission is to help teams uncover, prioritise, and address ethical challenges to align solutions with Microsoft’s values. She has over 20 years’ experience in technology companies, from start-ups to large enterprises, and enjoys uncovering deep insights into people’s needs, desires and motivations to position technology most helpfully within social systems. She has a Master’s in Social Psychology and her academic research included ingroup bias, tokenism and heterosexual attraction. She has held a variety of volunteer and board positions in non-profits related to teen homelessness, police accountability and supporting women in need.

-

The Human Consequences of AI

Biography

Ewa Luger is a Chancellor’s Fellow at the University of Edinburgh, where she is aligned to both the School of Informatics and the School of Design. Her research explores applied ethical issues within the sphere of machine intelligence and data-driven systems. This encompasses practical considerations such as data governance, consent, privacy, explainability and how intelligent networked systems might be made intelligible to the user, with a particular interest in distribution of power and spheres of digital exclusion.

-

Causality I and II

Abstract

In the field of causality we want to understand how a system reacts under interventions (e.g. in gene knock-out experiments). These questions go beyond statistical dependences and therefore cannot be answered by standard regression or classification techniques. In this tutorial you will learn about the interesting problem of causal inference and recent developments in the field. No prior knowledge about causality is required.

Part 1: We introduce structural causal models and formalize interventional distributions. We define causal effects and show how to compute them if the causal structure is known.

Part 2: We present four ideas that can be used to infer causal structure from data: (1) causal discovery by experimentation (e.g., randomized controlled trials, A/B-testing), (2) finding (conditional) independences in the data, (3) restricting structural equation models and (4) exploiting the fact that causal models remain invariant in different environments.

Part 3: If time allows, we show how causal concepts could be used in more classical machine learning problems.
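Observational and interventional distributions can differ, and a simulation makes the gap visible. The sketch assumes a hypothetical linear structural causal model with a hidden confounder Z, not an example taken from the tutorial. Regressing Y on observational data gives a slope of about 2. Intervening on X replaces its structural equation with a randomized assignment, as in an experiment, and recovers the true causal effect of 1.

```python
import numpy as np

def sample_scm(n, rng, do_x=None):
    """Hypothetical linear SCM with a confounder Z (all noises standard normal):
        Z := N_Z,   X := Z + N_X,   Y := X + 2 Z + N_Y.
    If do_x is given, the equation for X is replaced by X := do_x (an intervention)."""
    z = rng.standard_normal(n)
    x = z + rng.standard_normal(n) if do_x is None else do_x
    y = x + 2.0 * z + rng.standard_normal(n)
    return x, y

def slope(x, y):
    """Least-squares slope of y regressed on x."""
    return np.cov(x, y)[0, 1] / np.var(x, ddof=1)
```

For the observational data, the population slope is Cov(X, Y)/Var(X) = 4/2 = 2, because Z pushes X and Y in the same direction. Under do(X), X no longer depends on Z, so the slope drops to the causal coefficient 1. Standard regression alone cannot tell these two situations apart.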

Biography

Joris M. Mooij studied mathematics and physics and received his PhD degree with honors from the Radboud University Nijmegen (the Netherlands) in 2007. His PhD research concerned approximate inference in graphical models. During his postdoc at the Max Planck Institute for Biological Cybernetics in Tübingen (Germany), his research focus shifted towards causality. In 2017 he became Associate Professor at the University of Amsterdam, the Netherlands. He has obtained several personal research grants (NWO VENI, NWO VIDI, ERC Starting Grant) to fund his own research group on causality. He has won several awards for his work.

-

Introduction to Probabilistic Deep Learning

Abstract

Deep learning has made significant advances in supervised machine learning. Recent probabilistic deep learning models extend the reach of deep learning technology in two ways: 1. Learning from unsupervised data, and 2. Allowing for calibrated uncertainty of predictions. In this tutorial lecture we will look at the main classes of probabilistic deep learning models, including models such as variational autoencoders and generative adversarial networks.
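For variational autoencoders, two ingredients do most of the work: the reparameterization trick and the closed-form Gaussian KL term in the training objective. The NumPy sketch below shows both under the usual assumptions of a diagonal-Gaussian encoder output (mu, logvar) and a standard normal prior. The function names are illustrative, and a real implementation would compute gradients with an autodiff framework.

```python
import numpy as np

def reparameterize(mu, logvar, rng):
    """Draw z ~ N(mu, diag(exp(logvar))) as a deterministic function of (mu, logvar)
    plus independent noise, so gradients can flow through mu and logvar."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps

def gaussian_kl(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * np.sum(mu ** 2 + np.exp(logvar) - 1.0 - logvar, axis=-1)

def negative_elbo(recon_log_lik, mu, logvar):
    """VAE loss: -(E_q[log p(x | z)] - KL(q(z | x) || p(z)))."""
    return -(recon_log_lik - gaussian_kl(mu, logvar))
```

The KL term is zero exactly when the encoder output matches the prior (mu = 0, logvar = 0), and it grows as the approximate posterior moves away from it.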

Biography

Sebastian Nowozin is a principal researcher at Microsoft Research managing the Machine Intelligence and Perception group. He works on models and algorithms for artificial intelligence. Before joining Microsoft Research, he received his PhD in 2009 from the Max Planck Institute for Biological Cybernetics and the Technical University of Berlin. Sebastian is associate editor of IEEE TPAMI and the Journal of Machine Learning Research and an area chair at machine learning and computer vision conferences (NIPS, ICML, CVPR, ICCV).

-

Security and Privacy in Machine Learning

Abstract

There is growing recognition that machine learning (ML) exposes new security and privacy vulnerabilities in software systems, yet the technical community’s understanding of the nature and extent of these vulnerabilities remains limited, though it is expanding. In this talk, I explore the threat model space of ML algorithms and systematically characterize the vulnerabilities resulting from the poor generalization of ML models when they are presented with inputs manipulated by adversaries. This characterization of the threat space prompts an investigation of defenses that exploit the lack of reliable confidence estimates for predictions made. In particular, we introduce a promising new approach to defensive measures tailored to the structure of deep learning. Through this research, we expose connections between the resilience of ML to adversaries, model interpretability, and training data privacy.
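A small example shows what an input manipulated by an adversary can look like. The sketch applies the well-known fast gradient sign method (FGSM) to a hypothetical logistic-regression classifier. FGSM is a standard attack used here for illustration; it is not the defensive approach introduced in the talk. For a linear model, a step of size eps in every coordinate moves the decision score by eps·‖w‖₁, which can flip a confident prediction.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_grad_wrt_input(x, y, w, b):
    """Gradient w.r.t. x of the logistic loss log(1 + exp(-y (w.x + b))), with y in {-1, +1}."""
    margin = y * (w @ x + b)
    return -y * sigmoid(-margin) * w

def fgsm(x, y, w, b, eps):
    """Fast gradient sign method: move each input coordinate by eps in the
    direction that increases the loss (an L-infinity-bounded perturbation)."""
    return x + eps * np.sign(loss_grad_wrt_input(x, y, w, b))
```

With the illustrative weights w = [1, -2, 0.5], b = 0, a correctly classified point with score 1.4 drops to a score of 1.4 - 0.5·3.5 = -0.35 after an eps = 0.5 perturbation, so its predicted label flips.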

Biography

Nicolas Papernot earned his PhD in Computer Science and Engineering working with Professor Patrick McDaniel at the Pennsylvania State University. His research interests lie at the intersection of computer security, privacy and machine learning. He is supported by a Google PhD Fellowship in Security and received a best paper award at ICLR 2017. He is also the co-author of CleverHans, an open-source library widely adopted in the technical community to benchmark machine learning in adversarial settings. In 2016, he received his M.S. in Computer Science and Engineering from the Pennsylvania State University and his M.S. in Engineering Sciences from the Ecole Centrale de Lyon.

-

Causality I and II

Biography

Jonas Peters is an associate professor in statistics at the University of Copenhagen. Previously, he was a group leader at the MPI for Intelligent Systems in Tuebingen and a Marie Curie fellow at the Seminar for Statistics, ETH Zurich. He studied Mathematics at the University of Heidelberg and the University of Cambridge. He is interested in inferring causal relationships from observational data and works on theory, methodology and applications. His work relates to areas such as computational statistics, graphical models, independence testing and high-dimensional statistics.

-

Welcome to AI Summer School 2018

Biography

Scarlet Schwiderski-Grosche is a Principal Research Program Manager at Microsoft Research Cambridge, working for Microsoft Research Outreach in the Europe, Middle East, and Africa (EMEA) region. She is responsible for academic research partnerships in the region, especially for the Joint Research Centres with Inria in France and EPFL and ETH Zurich in Switzerland. Scarlet has a PhD in Computer Science from University of Cambridge. She was in academia for almost 10 years before joining Microsoft in March 2009. In academia, she worked as Lecturer in Information Security at Royal Holloway, University of London.

-

The Human Consequences of AI

Biography

Dr Simone Stumpf is a Senior Lecturer at City, University of London in the Centre for HCI Design. She has a long-standing research focus on end-user interactions with machine learning systems and has authored over 60 publications in this area. She organised workshops on End-user Interactions with Intelligent and Autonomous Systems at CHI 2012 and on Explainable Smart Systems at IUI 2018. Her current projects include designing user interfaces for smart heating systems, and smart home self-care systems for people with dementia or Parkinson’s disease. She is also interested in personal information management and end-user development to empower all users to use intelligent machines effectively.

-

AI in Healthcare

Abstract

The pace of technological development in artificial intelligence promises revolutionary improvements in health. The reality is that improving healthcare is complex because of the myriad people, processes, and systems that must work together. We are at a pivotal moment where the convergence of seamless communication, artificial intelligence, personalised medicine, and secure, trusted, hyper-scale cloud computing is giving us hope for a brighter future. We will discuss how Microsoft is driving innovation by designing AI health experiences that are centred around empowering patients, empowering clinicians, and empowering healthcare providers. http://democratizing-ai-in-health.com/

Biography

Dr Kenji Takeda is Global Director of the Azure for Research program, and Healthcare AI partnerships lead at Microsoft Research Cambridge. He is working with researchers worldwide to take best advantage of cloud computing and data science. His work in the healthcare AI team is to develop collaborations that help advance the state of the art in applying AI across different healthcare settings. He has extensive experience in cloud computing, high performance and high productivity computing, data science, scientific workflows, scholarly communication, engineering and educational outreach. He has a passion for developing novel computational approaches to tackle fundamental and applied problems in science, engineering, and healthcare. He is a visiting industry fellow at the Alan Turing Institute and visiting associate professor at the University of Southampton, UK.

-

How to Build a Deep Learning Framework

Abstract

The goal of this talk is to cover fundamental concepts in deep learning by actually building a deep learning framework using just Python and NumPy. Keywords: layers, nonlinearity, backpropagation, weight initialization, optimization.
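As a rough illustration of the keywords above, the following is a minimal sketch (not the talk's actual code) of a tiny framework in Python and NumPy: a `Linear` layer with He initialization, a `ReLU` nonlinearity, backpropagation through a `Sequential` container, and plain gradient-descent optimization. All class and function names, the toy sine-regression task and the hyperparameters are illustrative assumptions.

```python
import numpy as np


class Linear:
    """Fully connected layer y = x @ W + b (hypothetical minimal API)."""

    def __init__(self, n_in, n_out, rng):
        # He initialization: variance 2 / n_in suits ReLU nonlinearities.
        self.W = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        self.b = np.zeros(n_out)

    def forward(self, x):
        self.x = x  # cache the input for the backward pass
        return x @ self.W + self.b

    def backward(self, grad_out):
        # Gradients of the loss w.r.t. the parameters, then w.r.t. the input.
        self.dW = self.x.T @ grad_out
        self.db = grad_out.sum(axis=0)
        return grad_out @ self.W.T

    def params_and_grads(self):
        return [(self.W, self.dW), (self.b, self.db)]


class ReLU:
    """Elementwise nonlinearity max(0, x); it has no parameters."""

    def forward(self, x):
        self.mask = x > 0
        return x * self.mask

    def backward(self, grad_out):
        return grad_out * self.mask

    def params_and_grads(self):
        return []


class Sequential:
    def __init__(self, *layers):
        self.layers = layers

    def forward(self, x):
        for layer in self.layers:
            x = layer.forward(x)
        return x

    def backward(self, grad):
        # Backpropagation: apply the chain rule layer by layer, in reverse.
        for layer in reversed(self.layers):
            grad = layer.backward(grad)
        return grad

    def params_and_grads(self):
        return [pg for layer in self.layers for pg in layer.params_and_grads()]


def mse_loss(pred, target):
    """Mean squared error and its gradient with respect to pred."""
    diff = pred - target
    return np.mean(diff ** 2), 2.0 * diff / diff.size


def sgd_step(model, lr):
    # Plain gradient descent; `-=` updates each NumPy array in place.
    for param, grad in model.params_and_grads():
        param -= lr * grad


def train(steps=500, lr=0.05, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, size=(64, 1))
    y = np.sin(3.0 * x)  # toy regression target (an assumption)
    model = Sequential(Linear(1, 16, rng), ReLU(), Linear(16, 1, rng))
    losses = []
    for _ in range(steps):
        loss, grad = mse_loss(model.forward(x), y)
        model.backward(grad)
        sgd_step(model, lr)
        losses.append(loss)
    return model, losses
```

Calling `model, losses = train()` fits the toy target; comparing `losses[0]` with `losses[-1]` is a quick sanity check, and a finite-difference check on a single weight is a simple way to test a new layer's `backward` method.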

Biography

Ryota Tomioka has been a researcher in the Machine Intelligence and Perception group since November 2015. He was previously a research assistant professor at the Toyota Technological Institute at Chicago and, before that, an assistant professor in the Department of Mathematical Informatics, University of Tokyo. Ryota is interested in both the theory and application of (any mix of) machine learning, tensor decomposition, and neural networks.

-

Automating the Design of Predictive Models for Clinical Risk and Prognosis

Abstract

In this talk I will show how we can use data science and machine learning to create models that assist diagnosis, prognosis and treatment. Existing models suffer from two kinds of problems. Statistical models driven by theory or hypotheses are easy to apply and interpret, but they make many assumptions and often have inferior predictive accuracy. Machine learning models can be crafted to the data and often have superior predictive accuracy, but they are often hard to interpret and must be crafted for each disease … and there are a lot of diseases. I will discuss a suite of new methods that make machine learning itself do both the crafting and the interpreting. For medicine, this is a complicated problem because missing data must be imputed, relevant features/covariates must be selected, and the most appropriate classifier(s) must be chosen. Moreover, there is no single “best” imputation algorithm, feature processing algorithm or classification algorithm; some imputation algorithms will work better with a particular feature processing algorithm and a particular classifier in a particular setting. To deal with these complications, we need an entire pipeline. Because there are many possible pipelines, we need a machine learning method to choose among them, and this is exactly what AutoPrognosis is: an automated process for creating a particular pipeline for each particular setting. Using a variety of clinical datasets (from cardiovascular disease to cystic fibrosis and from breast cancer to dementia), I will show that AutoPrognosis achieves performance that is significantly superior to existing clinical approaches and to statistical and machine learning methods.
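To make the idea of choosing a whole pipeline concrete, here is a minimal sketch in Python and NumPy, assuming two hypothetical imputers, two feature-processing steps and two toy classifiers. It simply scores every combination on a held-out split and keeps the best; this exhaustive search is an illustrative stand-in and is not claimed to be how AutoPrognosis explores the space, which in practice is far larger. The component names and the synthetic data are assumptions for illustration only.

```python
import itertools
import numpy as np

# Hypothetical pipeline components. Each stage is fitted on X_fit only.


def impute_mean(X_fit, X):
    """Replace NaNs with the column means of X_fit."""
    return np.where(np.isnan(X), np.nanmean(X_fit, axis=0), X)


def impute_median(X_fit, X):
    """Replace NaNs with the column medians of X_fit."""
    return np.where(np.isnan(X), np.nanmedian(X_fit, axis=0), X)


def no_scaling(X_fit, X):
    return X


def standardize(X_fit, X):
    """Rescale each column to zero mean and unit variance, using X_fit."""
    sd = X_fit.std(axis=0)
    return (X - X_fit.mean(axis=0)) / np.where(sd > 0, sd, 1.0)


def nearest_centroid(X_fit, y_fit, X):
    classes = np.unique(y_fit)
    centroids = np.stack([X_fit[y_fit == c].mean(axis=0) for c in classes])
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]


def one_nearest_neighbour(X_fit, y_fit, X):
    dists = ((X[:, None, :] - X_fit[None, :, :]) ** 2).sum(axis=2)
    return y_fit[dists.argmin(axis=1)]


IMPUTERS = {"mean": impute_mean, "median": impute_median}
SCALERS = {"none": no_scaling, "standardize": standardize}
CLASSIFIERS = {"centroid": nearest_centroid, "1nn": one_nearest_neighbour}


def run_pipeline(names, X_tr, y_tr, X_va):
    """Fit each stage on the training split and apply it to both splits."""
    imp, scale, clf = IMPUTERS[names[0]], SCALERS[names[1]], CLASSIFIERS[names[2]]
    A_tr, A_va = imp(X_tr, X_tr), imp(X_tr, X_va)
    B_tr, B_va = scale(A_tr, A_tr), scale(A_tr, A_va)
    return clf(B_tr, y_tr, B_va)


def search_pipelines(X_tr, y_tr, X_va, y_va):
    """Exhaustively score every (imputer, scaler, classifier) combination."""
    scores = {}
    for names in itertools.product(IMPUTERS, SCALERS, CLASSIFIERS):
        preds = run_pipeline(names, X_tr, y_tr, X_va)
        scores[names] = float(np.mean(preds == y_va))
    best = max(scores, key=scores.get)
    return best, scores


def make_toy_data(n=200, seed=0):
    """Synthetic data: two informative features, one large-scale noise
    feature, and roughly 10% of entries missing at random."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, 3))
    X[:, 2] *= 100.0
    y = (X[:, 0] + X[:, 1] > 0).astype(int)
    X[rng.random(X.shape) < 0.1] = np.nan
    return X, y
```

For example, `X, y = make_toy_data()` followed by `best, scores = search_pipelines(X[:150], y[:150], X[150:], y[150:])` returns the names of the best-scoring combination together with every pipeline's validation accuracy. The toy data is built so that the nearest-centroid classifier is likely to do well only when standardization precedes it, which is a small instance of the interaction between stages that the abstract describes.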

Biography

Professor van der Schaar is MAN Professor at the University of Oxford and Turing Faculty Fellow of The Alan Turing Institute, where she leads the effort on Machine Learning for Medicine. Prior to this, she was a Chancellor’s Professor at UCLA. She is an IEEE Fellow. She has received the Oon Prize on Preventative Medicine from the University of Cambridge (2018). She has also received an NSF CAREER Award, three IBM Faculty Awards, the IBM Exploratory Stream Analytics Innovation Award, the Philips Make a Difference Award and several best paper awards, including the IEEE Darlington Award. She holds 33 granted US patents. Her current research focus is on machine learning, artificial intelligence and data science for medicine.

-

Transparent AI using Trusted Hardware

Abstract

We are increasingly building AI systems that rely on large amounts of sensitive data to train sophisticated models. Unfortunately, such systems are susceptible to a wide range of threats, from data breaches and covert channels to a lack of privacy, transparency and robustness. In this talk, I will describe the role trusted hardware can play in building AI applications that offer strong security guarantees and give users full visibility and control over how their data is used.

Biography

I am a researcher in the Systems and Networking group at Microsoft Research. I am broadly interested in secure and robust systems. I graduated from the Department of Computer Science and Automation at the Indian Institute of Science, where I worked on efficient and accurate profiling and performance modelling techniques.

-

Cooperative Deep Multi-Agent Reinforcement Learning

Abstract

Many real-world problems, such as network packet routing and the coordination of autonomous vehicles, are naturally modelled as cooperative multi-agent systems. In this talk, I overview some of the key challenges in developing reinforcement learning methods that can efficiently learn decentralised policies for such systems. These challenges include multi-agent credit assignment, multi-agent exploration, and representing and learning complex joint value functions. I also briefly describe several multi-agent learning algorithms proposed in my lab to address these challenges. Finally, I present results evaluating these algorithms in the testbed of StarCraft and StarCraft II unit micromanagement.
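One widely used idea for multi-agent credit assignment is a counterfactual baseline, as in counterfactual multi-agent (COMA) policy gradients: each agent's action is judged by comparing the joint value actually obtained with the value expected if that agent alone had acted according to its own policy, while the other agents' actions are held fixed. The sketch below, in Python and NumPy, assumes a known tabular joint action-value `q_joint` for a single state; the function name and the coordination-game example are illustrative assumptions, and it is not presented as one of the algorithms from the talk.

```python
import numpy as np


def counterfactual_advantages(q_joint, joint_action, policies):
    """Per-agent counterfactual advantages for one state.

    q_joint[u_1, ..., u_n] is the joint action-value Q(s, u) (assumed known).
    joint_action is the tuple of actions the agents actually took.
    policies[a] is agent a's action distribution pi_a(. | s).
    """
    q_taken = q_joint[tuple(joint_action)]
    advantages = []
    for a, pi in enumerate(policies):
        # Vary only agent a's action; keep everyone else's action fixed.
        index = list(joint_action)
        index[a] = slice(None)
        baseline = np.dot(pi, q_joint[tuple(index)])
        advantages.append(float(q_taken - baseline))
    return advantages


# Two agents, two actions each: the team is rewarded only if both pick action 1.
q_joint = np.array([[0.0, 0.0],
                    [0.0, 1.0]])
uniform = [np.array([0.5, 0.5]), np.array([0.5, 0.5])]
print(counterfactual_advantages(q_joint, (1, 1), uniform))  # [0.5, 0.5]
print(counterfactual_advantages(q_joint, (1, 0), uniform))  # [0.0, -0.5]
```

In the second call the whole team receives a value of zero, yet only agent 1, whose choice broke the coordination, gets a negative advantage; a shared team reward on its own cannot make that distinction.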

Biography

Shimon Whiteson is an associate professor in the Department of Computer Science at the University of Oxford, and a tutorial fellow at St. Catherine’s College. His research focuses on artificial intelligence, particularly machine learning and decision-theoretic planning. In addition to theoretical work on these topics, in recent years he has also applied them to practical problems in robotics and search engine optimisation. He studied English and Computer Science at Rice University before completing a doctorate in Computer Science at the University of Texas at Austin in 2007. He then spent eight years as an Assistant and then an Associate Professor at the University of Amsterdam before joining Oxford as an Associate Professor in 2015. He was awarded an ERC Starting Grant from the European Research Council in 2014 and a Google Faculty Research Award in 2017.