What is MindJourney?

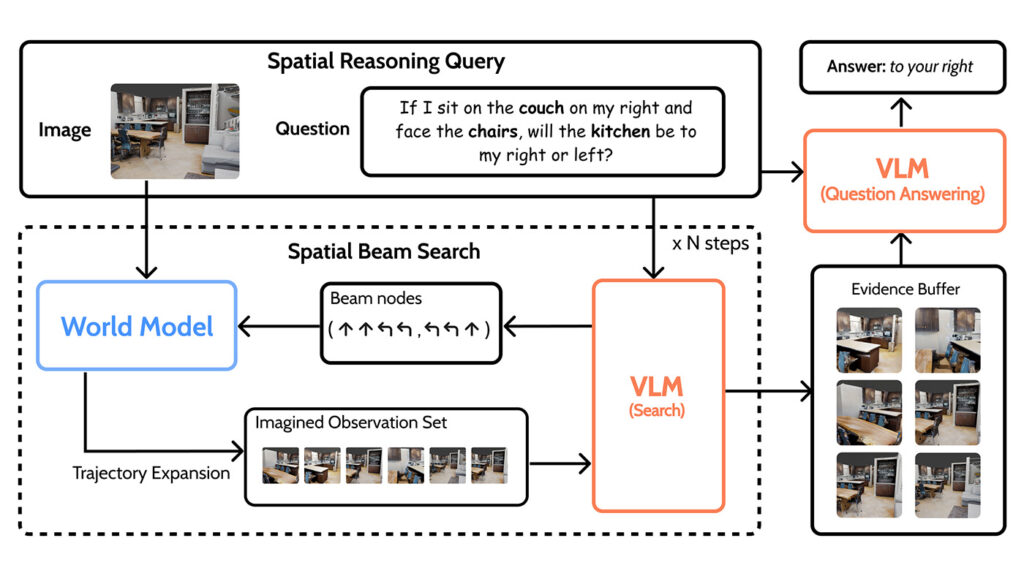

MindJourney is a framework that equips AI agents with a “simulation loop” for exploring hypothetical 3D viewpoints before they answer spatial reasoning questions. It targets a core limitation of vision-language models (VLMs), which recognize objects well in 2D images but struggle to infer the interactive 3D world behind them. Given a spatial reasoning query, three components work together: a world model (a camera-controllable video generator) renders candidate novel views along short action sequences; a Spatial Beam Search steers exploration toward the most promising trajectories; and an off-the-shelf VLM ranks and integrates the most informative observations to produce an answer, with no extra training required. The approach yields stronger performance on 3D spatial-reasoning benchmarks and points toward safer, more capable embodied agents that can reason beyond the visible frame, with potential applications in robotics, smart homes, and accessibility.
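To make the loop concrete, here is a minimal sketch of a beam search over camera actions. It is an illustration under stated assumptions, not the project's implementation: the function `spatial_beam_search`, its parameters, the action names, and the toy `toy_render` and `toy_score` stand-ins (which replace the world model and the VLM ranking step) are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Action = str
Trajectory = Tuple[Action, ...]


def spatial_beam_search(
    actions: Sequence[Action],
    render_view: Callable[[Trajectory], object],
    score_view: Callable[[object], float],
    beam_width: int = 2,
    max_depth: int = 3,
) -> List[Tuple[float, Trajectory]]:
    """Explore short action sequences, keeping the best `beam_width` per step.

    `render_view` stands in for a world model that produces an imagined view
    for a trajectory; `score_view` stands in for a VLM judging how helpful
    that view is. Both are hypothetical placeholders.
    """
    beam: List[Trajectory] = [()]  # start from the original viewpoint
    explored: List[Tuple[float, Trajectory]] = []

    for _ in range(max_depth):
        candidates: List[Tuple[float, Trajectory]] = []
        for traj in beam:
            for action in actions:
                new_traj = traj + (action,)
                view = render_view(new_traj)  # imagined observation
                candidates.append((score_view(view), new_traj))

        # Keep only the most promising trajectories for the next step.
        candidates.sort(key=lambda c: c[0], reverse=True)
        kept = candidates[:beam_width]
        explored.extend(kept)
        beam = [traj for _, traj in kept]

    # Return every kept trajectory, best score first.
    explored.sort(key=lambda c: c[0], reverse=True)
    return explored


# Toy stand-ins (assumptions): a "view" is just the net camera yaw in degrees,
# and the most helpful view is the one facing 60 degrees to the right.
YAW_STEP = {"move_forward": 0, "turn_left": -30, "turn_right": 30}


def toy_render(traj: Trajectory) -> int:
    return sum(YAW_STEP[a] for a in traj)


def toy_score(view: int) -> int:
    return -abs(view - 60)


results = spatial_beam_search(
    ["move_forward", "turn_left", "turn_right"],
    toy_render,
    toy_score,
    beam_width=2,
    max_depth=3,
)
best_score, best_traj = results[0]
print(best_score, best_traj)
```

In a real system, the rendering step would call a camera-controllable video generator and the scoring step would query a VLM; the highest-ranked views would then be passed back to the VLM alongside the original image to answer the question. The beam width and depth trade exploration coverage against the number of generated views.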

Acknowledgements

The project is by Yuncong Yang (Research Intern), Reuben Tan (Senior Researcher), Swadheen Shukla (Principal Program Manager), and Jianfeng Gao (Distinguished Scientist). We thank our external collaborators: Jiageng Liu (University of Massachusetts Amherst), Zheyuan Zhang (Johns Hopkins University), Siyuan Zhou (Hong Kong University of Science and Technology), Jianwei Yang (work done at MSR), Yilun Du (Harvard University), and Chuang Gan (University of Massachusetts Amherst).