A Practical Guide to Neural Machine Translation

- Jacob Devlin | Microsoft

In the last two years, attentional sequence-to-sequence neural models have become the state of the art in machine translation, far surpassing the accuracy of phrasal translation systems in many scenarios. However, these Neural Machine Translation (NMT) systems are not without their difficulties: training a model on a large-scale data set can often take weeks, and they are typically much slower at decode time than a well-optimized phrasal system. In addition, robust training of these models often relies on particular ‘recipes’ that are not well explained or justified in the literature. In this talk, I will describe a number of tricks and techniques to substantially speed up training and decoding of large-scale NMT systems. These techniques, which range from algorithmic to engineering-focused, reduced the time required to train a large-scale NMT system from two weeks to two days, and improved the decoding speed to match that of a well-optimized phrasal MT system. In addition, I will attempt to give empirical and intuitive justification for many of the choices made regarding architecture, optimization, and hyperparameters. Although this talk will primarily focus on NMT, the techniques described here should generalize to a number of other models based on sequence-to-sequence and recurrent neural networks, such as caption generation and conversational agents.

Casey Anderson

Jacob Devlin

Researcher

Series: Microsoft Research Talks

Decoding the Human Brain – A Neurosurgeon’s Experience

- Dr. Pascal O. Zinn

Challenges in Evolving a Successful Database Product (SQL Server) to a Cloud Service (SQL Azure)

- Hanuma Kodavalla

- Phil Bernstein

Improving text prediction accuracy using neurophysiology

- Sophia Mehdizadeh

Tongue-Gesture Recognition in Head-Mounted Displays

- Tan Gemicioglu

-

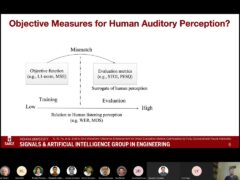

DIABLo: a Deep Individual-Agnostic Binaural Localizer

- Shoken Kaneko

-

-

-

-

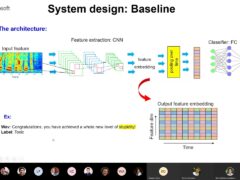

Audio-based Toxic Language Detection

- Midia Yousefi

-

-

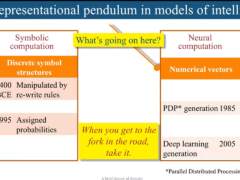

From SqueezeNet to SqueezeBERT: Developing Efficient Deep Neural Networks

- Forrest Iandola

- Sujeeth Bharadwaj

Hope Speech and Help Speech: Surfacing Positivity Amidst Hate

- Ashique Khudabukhsh

Towards Mainstream Brain-Computer Interfaces (BCIs)

- Brendan Allison

Learning Structured Models for Safe Robot Control

- Subramanian Ramamoorthy
