A Cross-modal Audio Search Engine based on Joint Audio-Text Embeddings

- Benjamin Elizalde | Carnegie Mellon University

Ad-hoc audio clips, such as those from smart speakers, social media apps, security cameras and podcasts, are recorded and shared online every day. For a variety of applications, it is important to be able to search through these recordings effectively. Web-based multimedia search engines, which index either content or textual tags independently, are not suitable for ad-hoc audio recordings. Such recordings lack reliable human- or machine-generated tags, and their audio content has low specificity.

In this work, we propose to connect the audio and text modalities through a joint-embedding framework that lets the two modalities exchange semantic information within a shared latent space. This allows content-based and text-based features associated with ad-hoc audio recordings to be mapped into the same space and compared directly for cross-modal search and retrieval. We also show that these jointly learnt embeddings outperform embeddings learnt from either modality alone. Our results therefore lay the groundwork for a cross-modal Audio Search Engine that can search through ad-hoc recordings with either text or audio queries.
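The abstract does not specify the model architecture. The sketch below is for illustration only and rests on several assumptions. It assumes each clip or text tag already has a feature vector, and that training has produced one linear projection per modality into the shared space. The weights shown are hand-set placeholders, not learned values. Under these assumptions, retrieval reduces to ranking items by cosine similarity in the shared space, and one function serves both text-to-audio and audio-to-text queries. `linear_projection`, `search` and all the numbers are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Projection = Callable[[Sequence[float]], Vector]


def linear_projection(weights: Sequence[Sequence[float]]) -> Projection:
    """Return a function computing W @ x, mapping one modality into the shared space."""
    def project(x: Sequence[float]) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return project


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale v to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors in the shared space."""
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def search(query: Sequence[float], project_query: Projection,
           database: Sequence[Sequence[float]], project_item: Projection,
           top_k: int = 3) -> List[int]:
    """Rank database items (other modality) by similarity to the query; return top indices."""
    q = project_query(query)
    scored = [(cosine(q, project_item(item)), idx) for idx, item in enumerate(database)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [idx for _, idx in scored[:top_k]]


# Hypothetical hand-set weights standing in for learned projections:
# 3-dim audio features and 2-dim text features both map into a 2-dim shared space.
audio_to_shared = linear_projection([[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]])
text_to_shared = linear_projection([[1.0, 0.0], [0.0, 1.0]])

audio_clips = [[0.9, 0.1, 0.0], [0.0, 0.5, 0.5], [0.5, 0.3, 0.2]]  # toy audio features
text_tags = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]                    # toy text features

# Text query -> audio clips
print(search([1.0, 0.0], text_to_shared, audio_clips, audio_to_shared, top_k=2))  # → [0, 2]
# Audio query -> text tags
print(search([0.0, 0.4, 0.6], audio_to_shared, text_tags, text_to_shared))        # → [1, 2, 0]
```

Because both modalities land in the same space, swapping the query and database projections is all it takes to change the search direction. A single-modality index could not support this.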

Speaker Details

Benjamin Elizalde is a PhD student at Carnegie Mellon University under the supervision of Prof. Bhiksha Raj. His current research focuses on Machine Learning for Audio. Previously, Benjamin worked as a Staff Researcher at ICSI-UC Berkeley in the Audio & Multimedia lab.

Shuayb Zarar

Principal Applied Scientist Manager

Series: Microsoft Research Talks

-

Decoding the Human Brain – A Neurosurgeon’s Experience

- Dr. Pascal O. Zinn

-

-

-

-

-

-

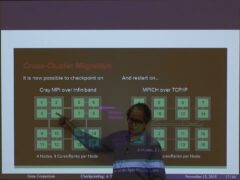

Challenges in Evolving a Successful Database Product (SQL Server) to a Cloud Service (SQL Azure)

- Hanuma Kodavalla,

- Phil Bernstein

-

Improving text prediction accuracy using neurophysiology

- Sophia Mehdizadeh

-

Tongue-Gesture Recognition in Head-Mounted Displays

- Tan Gemicioglu

-

DIABLo: a Deep Individual-Agnostic Binaural Localizer

- Shoken Kaneko

-

-

-

-

Audio-based Toxic Language Detection

- Midia Yousefi

-

-

From SqueezeNet to SqueezeBERT: Developing Efficient Deep Neural Networks

- Forrest Iandola,

- Sujeeth Bharadwaj

-

Hope Speech and Help Speech: Surfacing Positivity Amidst Hate

- Ashique Khudabukhsh

-

-

-

Towards Mainstream Brain-Computer Interfaces (BCIs)

- Brendan Allison

-

-

-

-

Learning Structured Models for Safe Robot Control

- Subramanian Ramamoorthy

-