About the Tools for Thought Project

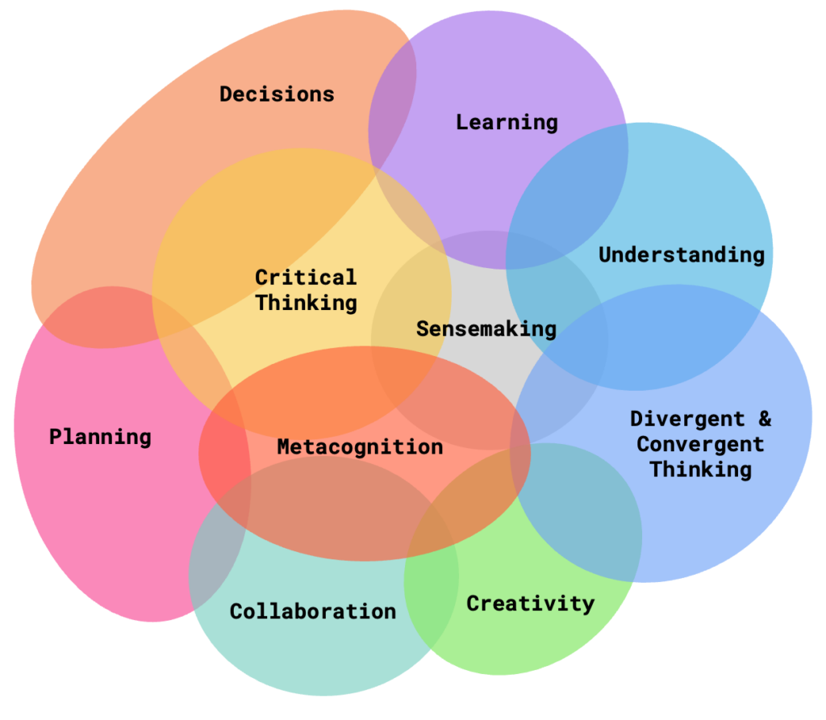

The Tools for Thought (T4T) project explores how AI might help people to think better, so that:

- as well as getting the job done, it helps us better understand and figure out the job.

- as well as creating content, it helps us think more critically and with more insight throughout an entire workflow.

- as well as automating known processes, it helps organisations predict and explore the unknown.

We explore these issues in two workstreams:

Working With Purpose: Focused on work over time, how can AI surface people’s goal-driven reasoning, capture it as an AI input, and harness it to support and explore workflows?

Thinking By Doing: Focused on work in the moment, how can AI deepen the quality of critical and creative thinking as people consume and create?

News

- Call for Papers: The Tools for Thought community is developing a 2026 HCI Journal Special Issue. Proposals are due October 15, 2025.

What We Know

AI should support critical thinking and metacognition in knowledge work

AI should challenge, not obey

Rather than optimizing solely for productivity or user satisfaction, AI systems should trigger deeper reflection and more rigorous decision-making in people by exposing flawed reasoning, asking probing questions, and offering alternative perspectives. Strategies such as argument mapping, adversarial prompting, and adaptive friction levels should balance engagement with user comfort: too much friction frustrates users, while too little is ignored. Ultimately, this vision recasts AI systems not just as efficient tools but as catalysts for cultivating essential human skills such as critical reflection and reasoning.

AI should help users monitor, evaluate, and control their thinking

AI tools not only alter tasks but also transform how users think about their own thinking, imposing new metacognitive demands. For example, novice users often over-rely on AI, resulting in misaligned confidence and limited reflection on errors. We propose two design strategies. First, systems should boost user metacognition through planning aids, feedback cues, and support for reflective questioning. Second, systems should reduce metacognitive demand through interfaces, such as task-specific prompting wizards and uncertainty-aware outputs, that lighten the burden. Metacognition offers a coherent framework for understanding the usability challenges posed by GenAI, enabling us to offer research and design directions to advance human-GenAI interaction.

AI reflection on meeting goals before, during, and between meetings improves effectiveness

Inefficiencies arise from misaligned mental models of meeting goals

The formative study for this series of papers explored meeting inefficiencies as a key blocker to productivity (WTI2024). Interviews with knowledge workers (N=21) revealed two dominant models of meetings: meetings as a means to achieve explicit, outcome-driven goals, and meetings as ends in themselves for fostering discussion and connection. This goal ambiguity affects scheduling, participation, and evaluation, and existing meeting tools lack the structure to capture dynamic intentions. We recommend embedding explicit goal fields, facilitating dynamic goal negotiation, and adding post-meeting checks to support co-constructed meeting purposes.

AI can generate goal-driven interfaces to improve meeting effectiveness

CoExplorer is a generative-AI meeting system that turns the plain text of a calendar invite into a concrete goal, sequenced phases, and phase-specific workspaces. Before the call, it asks invitees to vote on what matters, then refines agendas and resources accordingly. During the call, it monitors speech, proposes phase transitions, and automatically tiles relevant apps, keeping discussion, reference, and task spaces synchronized. Professionals (N=26) reported that this scaffolding could reduce set-up effort and sharpen shared focus. Participants liked the adaptive layouts and the ability to spotlight disputed topics, but warned that hidden automation risks eroding trust and agency and that abrupt layout shifts can clash with social norms. Recommendations include exploring tools that help people decide what needs to be discussed synchronously versus asynchronously, making system logic legible, and requiring lightweight human-on-the-loop confirmation for critical changes.

AI-assisted reflection before meetings improves goal setting and effectiveness

We explore users’ reactions to a Meeting Purpose Assistant (MPA), an AI tool that prompts organisers and attendees to articulate intended goals, challenges, and success criteria before meetings, then returns a concise, shareable summary. Using the MPA helped participants (N=18) uncover overlooked concerns and prioritize topics, sometimes leading to meetings being restructured or cancelled, while also reducing anxiety and increasing ownership. Recommendations include integrating brief, role-sensitive reflection prompts into existing calendar and meeting tools to enhance intentionality and alignment.

AI-assisted reflection on goals during meetings can keep meetings on track

We explored how AI nudges can keep real-world meetings aligned with their goals. Professionals (N=15) compared two probes: an Ambient Visualization that passively maps emerging topics to stated goals, and an Interactive Questioning agent that actively interrupts when no goal is set or discussion drifts. Participants said clarifying goals first enabled them to judge when talk was off-track; they welcomed the unobtrusive visual cues of the passive probe, but found active pop-ups effective yet socially disruptive. Recommendations include tuning intervention strength, balancing democratic input with efficiency, and preserving user control to sustain intentional, goal-oriented meetings.
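The summary does not say how the Interactive Questioning probe decides when to interrupt. Purely as an illustration, the sketch below assumes a hypothetical rule: ask for a goal when none is set, and flag drift when recent discussion shares too few words with every stated goal. The function names, the stopword list, the word-overlap measure, and the 0.2 threshold are all assumptions, not the probe's actual method.

```python
from __future__ import annotations

import re
from typing import Optional

# Hypothetical illustration only: a crude lexical check for "no goal set" or
# "discussion has drifted". It is not the Interactive Questioning probe's method.

STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "for", "we", "is",
             "on", "in", "it", "this", "that"}


def content_words(text: str) -> set[str]:
    """Lower-case the text and keep alphabetic tokens that are not stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def goal_overlap(recent_talk: str, goal: str) -> float:
    """Fraction of the goal's content words that also appear in recent talk."""
    goal_words = content_words(goal)
    if not goal_words:
        return 0.0
    return len(goal_words & content_words(recent_talk)) / len(goal_words)


def should_interrupt(goals: list[str], recent_talk: str,
                     threshold: float = 0.2) -> Optional[str]:
    """Return a prompt to show, or None to stay quiet (assumed rule)."""
    if not goals:
        return "No goal has been set for this meeting. What should it achieve?"
    best = max(goal_overlap(recent_talk, g) for g in goals)
    if best < threshold:
        return "The discussion seems to have moved away from the stated goals. Is this intended?"
    return None


goals = ["agree the launch budget"]
print(should_interrupt(goals, "we should agree the budget for launch"))  # None
print(should_interrupt(goals, "has anyone watched the football last night"))  # drift prompt
```

A real system would more plausibly compare semantic embeddings than raw word overlap; the lexical check only keeps the example self-contained. The `threshold` parameter is one place where the intervention strength discussed above could be tuned.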

AI can surface temporal context to maintain goal continuity

We explored how AI can strengthen reflection on goals across past and future meetings. By mapping sets of meetings, we found that recurring meetings served as hubs that knit multiple projects together across varied timescales. From this insight we developed a two-axis framework that visualises “objective” time against “subjective” importance, revealing under-supported regions where tools could help. Using AI to fuse transcripts, recordings and slides, we present three prototypes: Instant Recaps (lightweight reflection moments), Adaptive Meeting Handoff (contextual summaries between back-to-back meetings), and Project Browsers (long-horizon overviews). Together they illustrate design principles of aligning support to both available time and cognitive need, blending AI outputs with human annotations, and maintaining visibility of information to carry goals forward.

Creativity with AI should focus on thoughtful curation and orchestration

AI shifts human creative labour from mere production to curation of meaning

While current debates on generative AI often reduce creativity to issues of originality, plagiarism, and uncredited remixing, this paper contends that true creativity arises from the human acts of selecting, framing, and integrating machine-generated material. This shifts creative labour from the mere production of artefacts to the thoughtful curation of meaning, raises essential questions of authorship and credit, and calls for interfaces and policies that foreground human judgment in meaning-making.

Creatives orchestrate, not automate, with AI

We explored how creative professionals integrate AI into their work and how this reshapes their roles, workflows, and expectations. Practitioners (N=31) increasingly see themselves as orchestrators—managing tasks, tools, and ideas—rather than simply executing discrete steps. They adopt AI not to automate creativity, but to support processes like brainstorming, goal refinement, and iteration. While they value the efficiency and inspiration GenAI offers, they also face challenges: articulating goals, maintaining coherence across fragmented tools, and aligning outputs with intent. Creatives want to retain agency throughout and, in some cases, desire emotionally aware systems that respond empathetically in high-stakes phases. Future Creativity Support Tools (CSTs) should preserve human control while enhancing creative flow, context management, and co-creation.

Conversations with multiple agents can boost ideation diversity

Diversity of thought is critical in creative problem-solving, yet solo workers often lack access to alternative perspectives. We introduce YES AND, a multi-agent GenAI framework simulating diverse expert perspectives to enrich individual ideation. The confidence-based turn-taking model allows the user and the agents to interact through natural conversation, which, in turn, allows ideas to develop organically from diverse viewpoints. We highlight several design trade-offs: maintaining coherence across agent contributions, balancing agent autonomy with user control, and avoiding response verbosity.
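The page does not specify how YES AND computes its confidence-based turn-taking. A minimal sketch, assuming each agent reports a confidence between 0 and 1 that it has something useful to add, and that the most confident agent speaks only if it clears a threshold, otherwise the floor returns to the user. The `Agent` class, the `keyword_confidence` helper, the 0.6 threshold, and the agent personas are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical sketch of confidence-based turn-taking; not YES AND's implementation.


@dataclass
class Agent:
    name: str
    # Self-assessed confidence (0.0 to 1.0) that the agent can usefully respond
    # to the conversation so far. A real system might ask an LLM for this score.
    confidence: Callable[[list[str]], float]


def next_speaker(agents: list[Agent], history: list[str],
                 threshold: float = 0.6) -> str:
    """Return the most confident agent's name if it clears the threshold, else 'user'."""
    if not agents:
        return "user"
    scored = [(agent.confidence(history), agent) for agent in agents]
    # max() with a key returns the first agent among any tied top scores.
    best_score, best_agent = max(scored, key=lambda pair: pair[0])
    return best_agent.name if best_score >= threshold else "user"


def keyword_confidence(keyword: str, score: float) -> Callable[[list[str]], float]:
    """Toy confidence function: `score` if the latest message mentions `keyword`, else 0.1."""
    def confidence(history: list[str]) -> float:
        return score if history and keyword in history[-1].lower() else 0.1
    return confidence


agents = [
    Agent("economist", keyword_confidence("cost", 0.9)),
    Agent("designer", keyword_confidence("user", 0.8)),
]
print(next_speaker(agents, ["How do we keep the cost down?"]))  # economist
print(next_speaker(agents, ["Any other thoughts?"]))            # user
```

Falling back to the user whenever no agent is confident enough is one simple way to address the trade-off between agent autonomy and user control named above.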

Integrating AI into Knowledge Work requires a balance of design and change management

Knowledge Workers balance AI efficiency with critical oversight

Our survey of 319 professionals across industries shows that workflows are shifting from direct task execution to overseeing and integrating AI-generated content. This change improves efficiency and reduces perceived effort but risks diminishing independent judgment, especially when users overly trust AI because of low self-confidence. Conversely, those confident in their abilities scrutinize and refine AI outputs more critically. We identify obstacles such as time pressure, limited evaluative skills, and low awareness of when deeper analysis is needed. Design enhancements such as prompts for justification, skill-building feedback, and structured critical reflection tools can help ensure that gains in efficiency do not come at the cost of independent, critical thought.

AI may introduce inefficiencies unless it is integrated deliberately

AI is frequently touted as a productivity enhancer, yet it can introduce inefficiencies, confusion, and added cognitive load, reflecting the “ironies of automation” from human factors research. We identify four main challenges: a shift from creative production to increased supervisory demands; workflow disruptions breaking established rhythms; frequent task interruptions from AI suggestions; and a polarization effect, where simple tasks become easier while complex ones grow more challenging. These problems stem largely from interface design flaws and skewed user expectations rather than from the models themselves. They may be remedied by principles such as continuous feedback, personalization, ecological interface design, and clear task allocation that support situational awareness and reduce unintended delegation. Together, these principles call for integrating AI into workflows more deliberately.

AI can scaffold decision-making processes

Provocations drive diverse AI shortlisting decisions

Shortlisting tasks in knowledge work demand structured yet subjective critical thinking, but relying solely on AI risks an uncritical “mechanised convergence” of rankings. We explored whether brief textual provocations attached to AI suggestions can enhance reflective thinking. In a between-subjects study, participants (N=24) exposed to these cues engaged more in evaluation, modification, and questioning, resulting in more varied shortlists. Provocations function best as “microboundaries”: small interruptions that nudge users toward reflective cognition without derailing progress.

AI decision-support should balance delivering solutions with supporting reasoning

We compared two paradigms in AI-assisted decision-making: RecommendAI, which offers direct suggestions akin to common recommendation-based decision-support tools, and ExtendAI, which prompts users to articulate their decision rationale before providing reflective feedback. In a simulated investment scenario (N=21), ExtendAI led to more diversified portfolios and was perceived as more supportive of users’ reasoning, but demanded greater cognitive effort. Conversely, RecommendAI provided more novel ideas but was often less aligned with users’ thought processes. We recommend that AI systems follow a balanced approach that dynamically alternates between inspiring users with new ideas and scaffolding their reasoning, reframing AI decision support as a collaborative partnership.

Prompting and evaluating AI outputs is a new skill and a UI opportunity

AI should enhance human auditing for reliable oversight

Large language models now generate complex outputs, posing challenges for accurate error detection. We introduce the concept of “co-audit,” where specialized interfaces support people assessing AI-generated content. We explore the cognitive and contextual requirements for effective co-auditing, identifying key factors like error visibility, mistake cost, audit frequency, and solution ambiguity. We stress that co-audit tools must balance detail with cognitive load, provide uncertainty signals and adjustable rationales, and allow timely human intervention. Our framework guides the responsible design of AI auditing systems for high-stakes domains.

AI enhances sensemaking in data analysis but demands better prompt guidance

We investigated how everyday spreadsheet users use AI to make sense of data. Working with 15 volunteers (students, analysts, engineers and others), we found that AI sped up the information-foraging loop by quickly surfacing relevant datasets, code snippets and step-by-step instructions, and enriched the sense-making loop by suggesting fresh hypotheses or analysis tactics. Yet progress often stalled when users struggled to phrase precise prompts, supply sufficient context or judge whether long, sometimes vague answers were correct. People coped by iterating queries, chasing citations, or testing AI-generated code, but they worried about errors and “hallucinations.” Future AI tools should help us craft richer prompts, flag uncertain outputs, teach verification skills and integrate smoothly with familiar apps like Excel so we can keep control while enjoying AI’s speed and creativity.

Dynamic UI for AI lowers the barrier for steering AI outputs

Effective prompting remains a major barrier for AI users, particularly when outputs must be contextual, accurate, and controllable. We explore how UI controls that focus on task decomposition can facilitate better AI outputs. We compare two middleware prototypes: one offering static, generic prompt options, and another providing dynamic, context-refined controls generated from the user’s initial prompt. In a study with 16 participants, the dynamic controls lowered barriers to providing context, improved users’ perceived control, and provided guidance for steering the AI. They also reduced prompt iteration, though at the cost of somewhat increased cognitive load and reduced predictability. Dynamically generated UI that can be customised to context is feasible and can empower users to shape AI behaviour outside the chat paradigm.

AI cannot explain itself

Large language models produce fluent text but cannot introspectively explain their outputs. Instead, they generate “exoplanations”: post hoc, plausible justifications that do not reflect genuine reasoning. This misleading behavior risks leading users to overtrust AI outputs, especially in sensitive contexts. The paper distinguishes between mechanistic explanations and these synthetic rationales, urging designers to incorporate features like uncertainty signals, structured responses, and external auditing to foster genuine critical reflection rather than illusory transparency.

AI for education and learning requires active integration

AI in Higher Ed needs policies designed around balanced usage

Generative AI’s rapid uptake in universities has outpaced clear institutional guidance, creating uncertainty for both students and educators. Interviews with 26 students and 11 educators from two UK universities reveal that students employ AI in varied, context-sensitive ways—such as summarizing material, generating examples, and exploring topics—to boost efficiency, despite concerns over plagiarism, dependency, and skill erosion. Educators, meanwhile, see both promise and risk yet lack consensus on how to adapt policies and assessments. The study highlights a misalignment between student practices and educator expectations, calling for explicit training, revised assessment formats, and policies that align with real-world AI use while upholding academic integrity.

Note-taking plus LLM use balances learning and engagement

As students increasingly use large language models (LLMs) for learning, educators face urgent questions about how such tools affect core outcomes like comprehension and retention. We conducted experiments comparing LLMs, traditional note-taking, and combined approaches on student comprehension and retention. We found note-taking improved long-term retention and comprehension compared to LLM use alone, while combined note-taking and LLM use balanced comprehension benefits with student engagement. We identified “prompting archetypes,” capturing diverse strategies students used when interacting with the LLM. These behaviours correlated with learning outcomes, highlighting the importance of metacognitive prompting skills. Together, these findings underline the value of actively involving learners in processing information rather than having them passively receive AI-generated summaries.

AI may make programmers’ naming choices more predictable

We looked at how GitHub Copilot, an AI coding assistant, influences the names programmers choose for identifiers such as classes and methods. Naming matters in programming because it helps others understand what the code does. Participants (N=12) completed coding tasks with Copilot turned on, turned off, or showing suggestions that had to be typed out rather than auto-accepted. We found that when Copilot was active, even when its suggestions had to be typed out, people chose more predictable, less unique names. When Copilot was off, names were more varied and sometimes more descriptive. AI tools like Copilot can nudge programmers toward common names, which might save time but reduce creativity or clarity. Future AI tools should offer more distinctive suggestions and help refine names over time, balancing AI’s speed with the human need for meaningful, understandable code.

People

The Tools for Thought team is interdisciplinary, mixing experts in social science, computer science, engineering, and design. The team is co-led by Richard Banks and Sean Rintel.

Members

Sean Rintel

Senior Principal Research Manager

Richard Banks

Principal Design Manager

Advait Sarkar

Senior Researcher

Pratik Ghosh

Senior Research Designer

Martin Grayson

Principal Research Software Development Engineer

Britta Burlin

Principal Design Manager

Lev Tankelevitch

Senior Researcher

Payod Panda

Design Engineering Researcher

Viktor Kewenig

Cognitive Science Researcher

Leon Reicherts

Researcher

Collaborators

Abigail Sellen

Distinguished Scientist and Lab Director

Siân Lindley

Senior Principal Research Manager

Jack Williams

Senior Researcher

Christian Poelitz

Senior Research Engineer

Jake Hofman

Senior Principal Researcher