Microsoft Research Blog

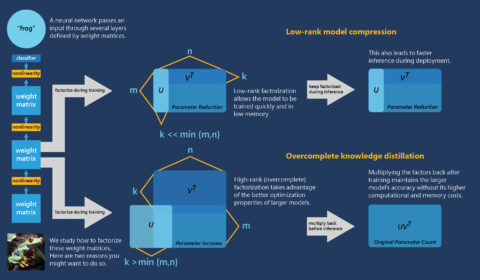

Factorized layers revisited: Compressing deep networks without playing the lottery

Misha Khodak, Neil Tenenholtz, Lester Mackey, and Nicolo Fusi

From BiT (928 million parameters) to GPT-3 (175 billion parameters), state-of-the-art machine learning models are rapidly growing in size. With the greater expressivity and easier trainability of these models come skyrocketing training costs, deployment difficulties, and even climate impact. As…

Research at Microsoft 2020: Addressing the present while looking to the future

Microsoft researchers pursue the big questions about what the world will be like in the future and the role technology will play. Not only do they take on the responsibility of exploring the long-term vision of their research, but they…