Learning Language through Interaction

- Hal Daume III | University of Maryland

Machine learning-based natural language processing systems are amazingly effective when plentiful labeled training data exists for the task or domain of interest. Unfortunately, for broad-coverage language understanding (broad in both task and domain), we’re unlikely to ever have sufficient labeled data, so systems must find some other way to learn. I’ll describe a novel algorithm for learning from interaction, along with several problems of interest, most notably machine simultaneous interpretation (translating while someone is still speaking). This is all joint work with some amazing (former) students, He He, Alvin Grissom II, John Morgan, Mohit Iyyer, Sudha Rao and Leonardo Claudino, as well as colleagues Jordan Boyd-Graber, Kai-Wei Chang, John Langford, Akshay Krishnamurthy, Alekh Agarwal, Stéphane Ross, Alina Beygelzimer and Paul Mineiro.

Chris Quirk

Partner Researcher

Series: AIFactory – France research lecture library

Keynote: Model-Based Machine Learning

- Christopher Bishop

AI and Security

- Taesoo Kim; Dawn Song; Michael Walker

Transforming Machine Learning and Optimization Through Quantum Computing

- Krysta Svore; Helmut Katzgraber; Matthias Troyer; Nathan Wiebe

AI for Earth

- Tanya Berger-Wolf; Carla Gomes; Milind Tambe

Social and Emotional Intelligence in AI and Agents

- Mary Czerwinski; Justine Cassell; Jonathan Gratch; Daniel McDuff; Louis-Philippe Morency

Microsoft Cognitive Toolkit (CNTK) for Deep Learning

- Sayan Pathak; Cha Zhang; Yanmin Qian; Chris Basoglu

-

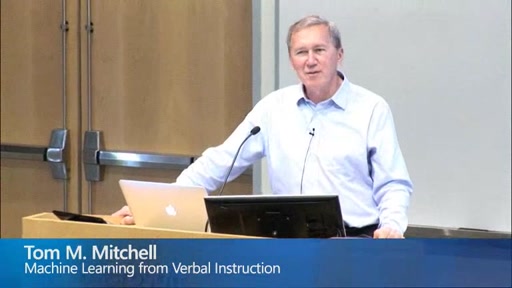

Machine Learning from Verbal Instruction

- Tom M. Mitchell

-

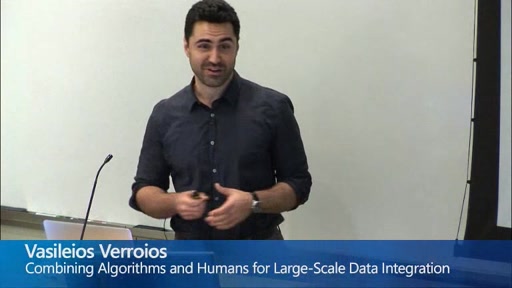

Combining Algorithms and Humans for Large-Scale Data Integration

- Vasileios Verroios
