Contrastive explanations | G2: Make clear how well the system can do what it can do

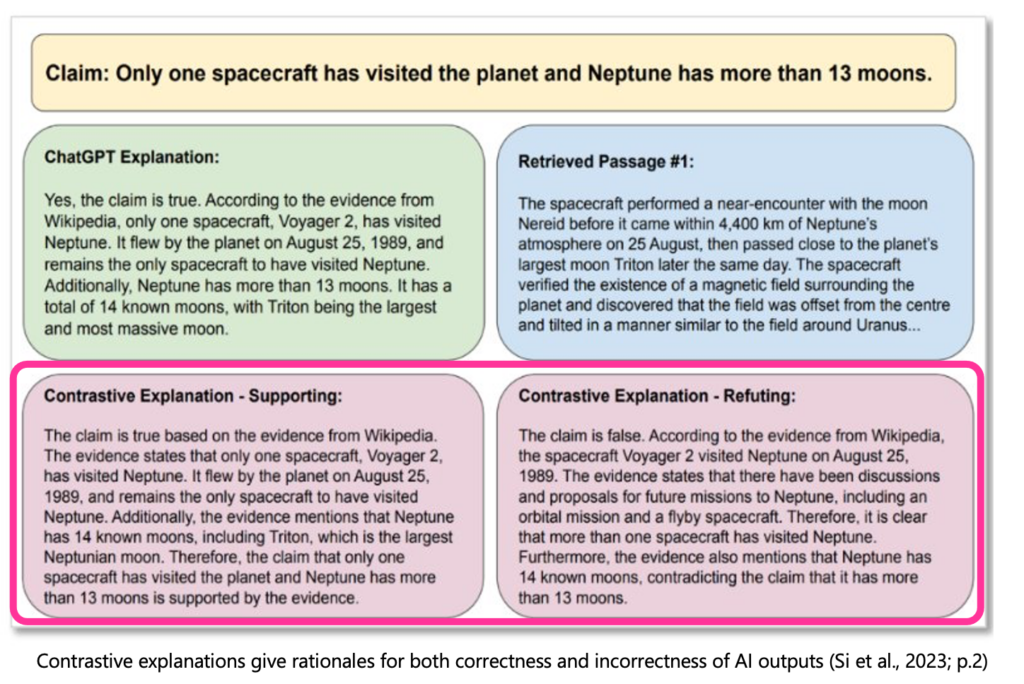

Contrastive explanations (Si et al., 2023) make clear how well the system can do what it can do (Guideline 2) by presenting one explanation that supports an AI output and another that refutes it.
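The following is a minimal sketch of how such a pair of explanations might be produced and shown together. It assumes a generic text-generation function: the `generate` callable is a hypothetical stand-in for any model call, and the prompt wording, `ContrastiveExplanation`, `build_prompts`, `explain_contrastively`, and `render` are illustrative names, not the method used by Si et al.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ContrastiveExplanation:
    """An AI output paired with one argument for it and one against it."""
    ai_output: str
    supporting: str
    refuting: str


def build_prompts(question: str, ai_output: str) -> tuple[str, str]:
    """Build two prompts about the same output (illustrative wording only)."""
    context = f"Question: {question}\nProposed answer: {ai_output}\n"
    support = context + "Explain the strongest reasons this answer is correct."
    refute = context + "Explain the strongest reasons this answer is incorrect."
    return support, refute


def explain_contrastively(
    question: str,
    ai_output: str,
    generate: Callable[[str], str],  # hypothetical model call: prompt -> text
) -> ContrastiveExplanation:
    """Ask the model for the case for and against the same output."""
    support_prompt, refute_prompt = build_prompts(question, ai_output)
    return ContrastiveExplanation(
        ai_output=ai_output,
        supporting=generate(support_prompt),
        refuting=generate(refute_prompt),
    )


def render(exp: ContrastiveExplanation) -> str:
    """Show both explanations together so the user weighs each side."""
    return "\n".join([
        f"AI answer: {exp.ai_output}",
        f"Reasons it may be right: {exp.supporting}",
        f"Reasons it may be wrong: {exp.refuting}",
    ])
```

Displaying the refuting explanation alongside the supporting one, rather than a justification alone, is what gives users a view of where the output may be weak.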

The techniques used in this example have the potential to foster appropriate reliance on AI by reducing overreliance. Keep in mind that overreliance mitigations can backfire. Be sure to test such mitigations in context, with your AI system’s actual users. Learn more about overreliance on AI and appropriate reliance on generative AI.